I had been using ChatGPT and then Bard for ‘inspiration’ when doing some of our Python scripting on newer stuff that I have been learning. It’s like having an over-caffeinated coding pal next to you to hammer out any test code you fancy.

I had some “help” from ChatGPT when learning to connect to and read sensor data from a Silicon Labs XG24 Bluetooth/BLE development board, and the baseline idea for our team’s further development is in the bag.

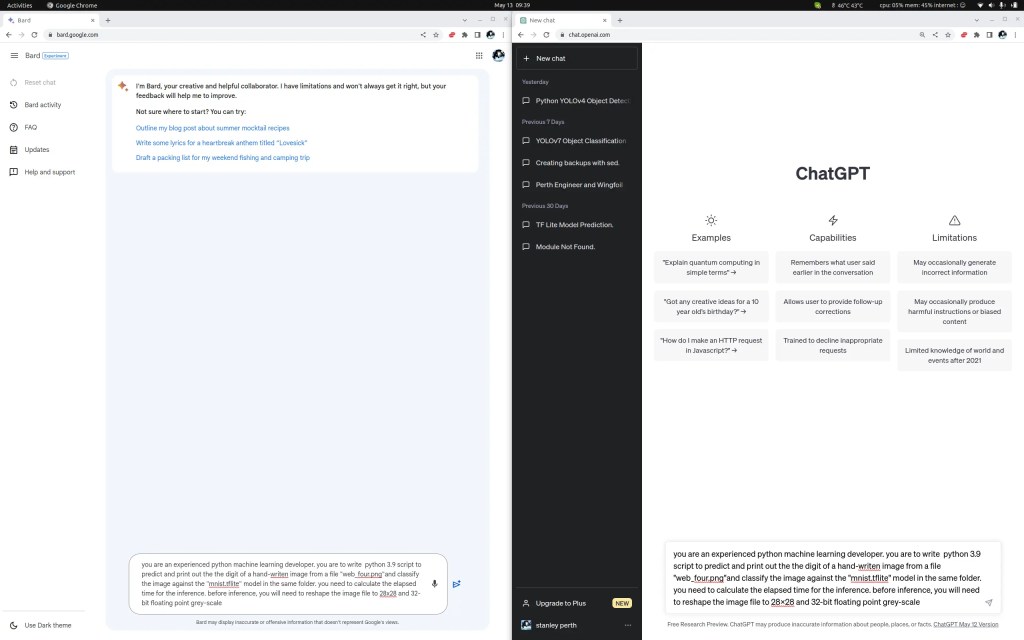

OK, this experiment should be interesting .. I’d like to see how “correct” the first responses from Bard and ChatGPT are when each is asked to write a Python script that grabs a “written digit” image of unknown size from my drive and classifies it with a TensorFlow Lite model file, trained on the MNIST database, that I had already prepared. The script also needs to calculate the elapsed time.

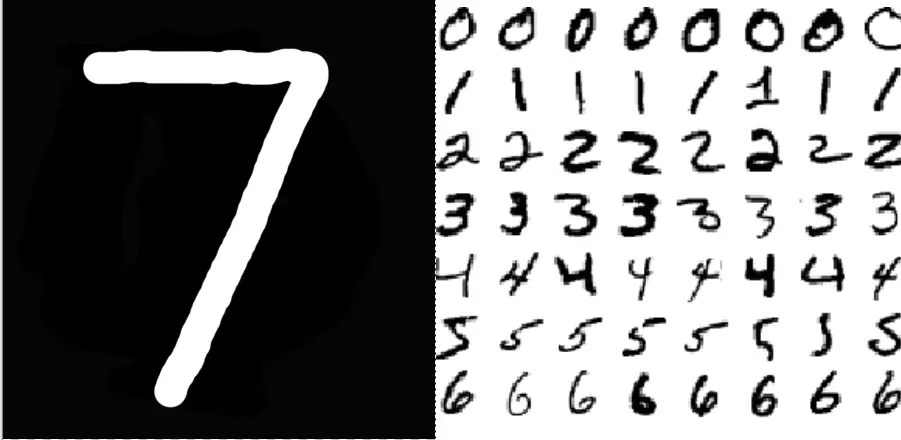

Test digit image vs. the MNIST digit database images

My ‘one-shot’ prompt is ..

you are an experienced python machine learning developer. you are to write python 3.9 script to predict and print out the the digit of a hand-written image from a file “web_four.png”and classify the image against the “mnist.tflite” model in the same folder. you need to calculate the elapsed time for the inference. before inference, you will need to reshape the image file to 28×28 and 32-bit floating point grey-scale

And I unleashed the machines …

A second or two later …

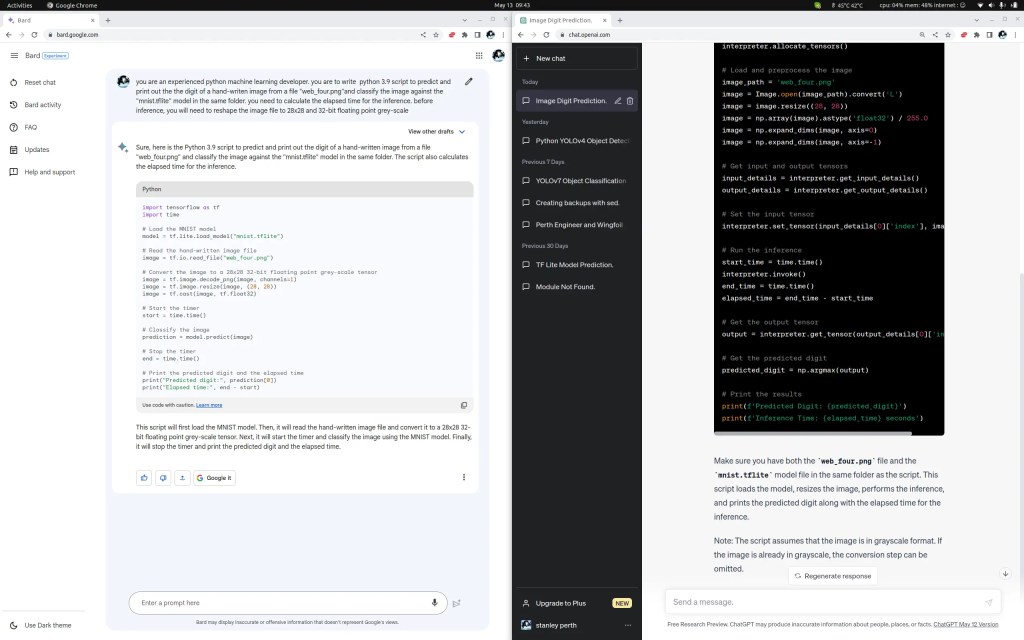

ChatGPT started to ‘write’ line by line while Bard paused a second and off-loaded the whole script onto the screen. I reckon “they” both took about the same time to complete the job. Each of them also gave some advice about making sure of the file location, type etc.

The script from Bard …

import tensorflow as tf

import time

# Load the MNIST model

model = tf.lite.load_model("mnist.tflite")

# Read the hand-written image file

image = tf.io.read_file("web_four.png")

# Convert the image to a 28x28 32-bit floating point grey-scale tensor

image = tf.image.decode_png(image, channels=1)

image = tf.image.resize(image, (28, 28))

image = tf.cast(image, tf.float32)

# Start the timer

start = time.time()

# Classify the image

prediction = model.predict(image)

# Stop the timer

end = time.time()

# Print the predicted digit and the elapsed time

print("Predicted digit:", prediction[0])

print("Elapsed time:", end - start)

.. and the code from ChatGPT

import numpy as np

import tensorflow as tf

from PIL import Image

import time

# Load the model

interpreter = tf.lite.Interpreter(model_path='mnist.tflite')

interpreter.allocate_tensors()

# Load and preprocess the image

image_path = 'web_four.png'

image = Image.open(image_path).convert('L')

image = image.resize((28, 28))

image = np.array(image).astype('float32') / 255.0

image = np.expand_dims(image, axis=0)

image = np.expand_dims(image, axis=-1)

# Get input and output tensors

input_details = interpreter.get_input_details()

output_details = interpreter.get_output_details()

# Set the input tensor

interpreter.set_tensor(input_details[0]['index'], image)

# Run the inference

start_time = time.time()

interpreter.invoke()

end_time = time.time()

elapsed_time = end_time - start_time

# Get the output tensor

output = interpreter.get_tensor(output_details[0]['index'])

# Get the predicted digit

predicted_digit = np.argmax(output)

# Print the results

print(f'Predicted Digit: {predicted_digit}')

print(f'Inference Time: {elapsed_time} seconds')
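A side note on the timing: a single `invoke()` on a small model is a very short interval to measure, and one reading can be noisy. The sketch below is a minimal alternative, not what either chatbot wrote: it uses `time.perf_counter()`, repeats the call and reports the median. The helper name `time_call`, the repeat counts and the `fake_inference` workload are all my own placeholders, so the sketch runs without a model file.

```python
import statistics
import time


def time_call(fn, repeats=20, warmup=2):
    """Return (median, fastest) wall-clock seconds for calling fn()."""
    for _ in range(warmup):
        fn()  # warm-up calls are not timed
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples), min(samples)


def fake_inference():
    # Hypothetical stand-in workload, NOT a real TensorFlow Lite inference.
    return sum(i * i for i in range(10_000))


median_s, fastest_s = time_call(fake_inference)
print(f"median {median_s * 1e3:.3f} ms, fastest {fastest_s * 1e3:.3f} ms")
```

In ChatGPT's script, something like `time_call(interpreter.invoke)` placed after the `set_tensor` call should do the same job for the real model.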

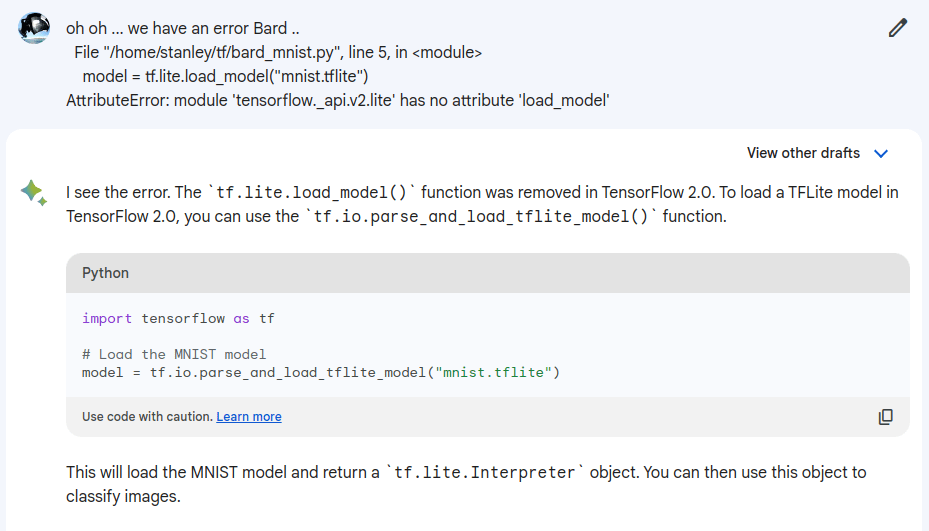

Now judgement time for this experiment .. it’s brutal I guess, one-shot only and loser goes home hhh .. let’s run Bard’s response first

stanley@stanLG:~/tf$ python3 bard_mnist.py

2023-05-13 09:45:56.825094: I tensorflow/core/util/port.cc:110] oneDNN custom operations are on. You may see slightly different numerical results due to floating-point round-off errors from different computation orders. To turn them off, set the environment variable `TF_ENABLE_ONEDNN_OPTS=0`.

Traceback (most recent call last):

File "/home/stanley/tf/bard_mnist.py", line 5, in <module>

model = tf.lite.load_model("mnist.tflite")

AttributeError: module 'tensorflow._api.v2.lite' has no attribute 'load_model'

and ChatGPT’s attempt

stanley@stanLG:~/tf$ python3 chatgpt_mnist.py

2023-05-13 09:46:38.712179: I tensorflow/core/util/port.cc:110] oneDNN custom operations are on. You may see slightly different numerical results due to floating-point round-off errors from different computation orders. To turn them off, set the environment variable `TF_ENABLE_ONEDNN_OPTS=0`.

INFO: Created TensorFlow Lite XNNPACK delegate for CPU.

Predicted Digit: 6

Inference Time: 0.00041866302490234375 seconds

Oh! Bard somehow made a mistake with a module attribute and was not able to deliver a working solution at its first attempt, while ChatGPT was spot-on with a working script that did everything asked of it (even though the prediction was off due to my shabby training …)

So, for now it’s 0-1 with “old man” ChatGPT from OpenAI ahead this round.
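Since the predicted digit was wrong, it could be handy to look at the runner-up classes instead of only the `argmax`. Here is a minimal sketch of that idea. The `top_k` helper is my own name, and the score vector is invented purely for illustration; it is not what my model returned.

```python
def top_k(scores, k=3):
    """Return the k (digit, score) pairs with the highest scores, best first."""
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical scores for digits 0-9, invented for illustration only;
# these are NOT the values my model produced.
example_scores = [0.01, 0.02, 0.03, 0.02, 0.30, 0.05, 0.45, 0.04, 0.05, 0.03]

for digit, score in top_k(example_scores):
    print(f"digit {digit}: {score:.2f}")
```

With ChatGPT's script, and assuming the model outputs one row of ten scores, `top_k(output[0].tolist())` would show whether the right digit was at least a close second.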

Before we go, I gave Bard a chance to redeem “himself” …

.. let’s try the fix from Bard

Traceback (most recent call last):

File "/home/stanley/tf/bard_mnist.py", line 5, in <module>

model = tf.io.parse_and_load_tflite_model("mnist.tflite")

AttributeError: module 'tensorflow._api.v2.io' has no attribute 'parse_and_load_tflite_model'

Oh no!!! … not sure where Bard’s been picking up his bad habits from this morning but he still stuffed it up. Initially it was my fault for not explaining that we are using TensorFlow 2.X (which should be the case for anyone coding today …) but sorry, “No Pass” for Bard this round.
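Both of Bard's attempts died on a function name that does not exist in the installed library. One cheap habit, sketched below, is to check that a suggested API path actually resolves before building a script around it. The helper name `api_exists` is my own, and the demo uses the standard library so it runs without TensorFlow.

```python
import importlib


def api_exists(dotted_path):
    """Return True if 'package.module.attr' resolves to a real object."""
    parts = dotted_path.split(".")
    try:
        obj = importlib.import_module(parts[0])
    except ImportError:
        return False
    # Walk the remaining names one attribute at a time.
    for name in parts[1:]:
        try:
            obj = getattr(obj, name)
        except AttributeError:
            return False
    return True


# Demonstrated on the standard library so it runs without TensorFlow.
print(api_exists("time.perf_counter"))  # a real function
print(api_exists("time.load_model"))    # a made-up name
```

With TensorFlow installed, and going by the runs above, I'd expect `api_exists("tensorflow.lite.Interpreter")` to come back `True` and `api_exists("tensorflow.lite.load_model")` to come back `False`.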
